According to foreign media reports, large technology companies such as Google and Meta are launching a counterattack against NVIDIA, the GPU maker that dominates the artificial intelligence (AI) chip market. As these companies accelerate in-house chip development to reduce their dependence on NVIDIA, analysts predict that shipments of custom chips (application-specific integrated circuits, or ASICs) from large technology companies will surpass shipments of NVIDIA's AI GPUs as early as 2026.

According to Korea's Chosun Ilbo, an ASIC is a chip built for specific AI services rather than for the general-purpose versatility of an AI GPU. Because its hardware is optimized only for the computations it actually needs, it offers higher power efficiency and significantly lower costs than a GPU. A report released on the 27th by Japan's Nomura Securities projects that Google will ship 1.5 million to 2 million units of its in-house AI chip, the TPU, in 2025, while ASIC shipments from Amazon Web Services (AWS) are expected to reach 1.4 million to 1.5 million units. Combined, the two companies' shipments come close to half of NVIDIA's annual AI GPU supply (about 5 million to 6 million units).

In 2026, as other technology giants such as Meta join in, total ASIC AI chip shipments are expected to exceed those of NVIDIA's AI GPUs. NVIDIA currently holds more than 80% of the AI server market, while servers using ASICs account for only 8% to 11%. In terms of chip shipment volume, however, the market landscape is changing rapidly.

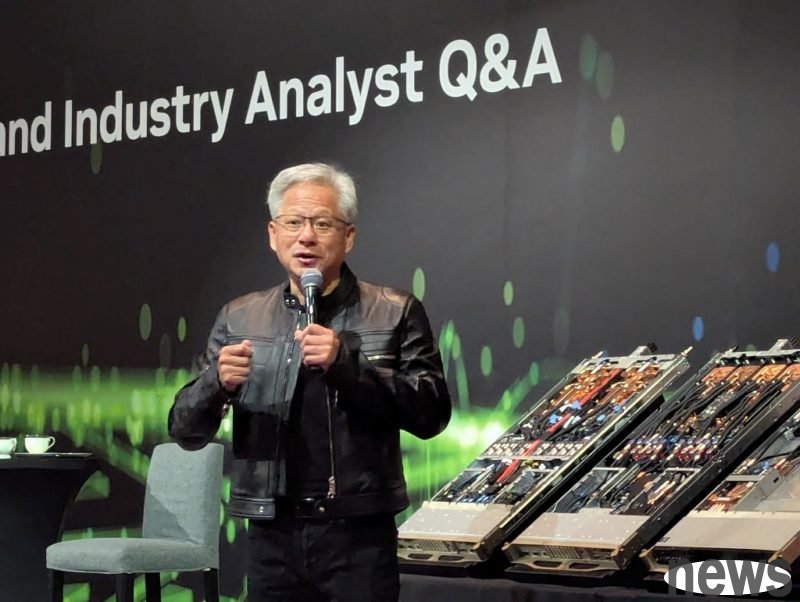

Large technology companies are accelerating in-house chip development because they hope to escape the high-cost structure known as the "NVIDIA tax." At an event this month, NVIDIA CEO Jensen Huang emphasized the strengths of the company's AI chips, asking why anyone would build their own ASIC if it is not better than what they can buy. Nevertheless, large tech companies are stepping up investment in in-house chips to secure long-term cost-effectiveness and supply stability.

The biggest attraction of ASICs for large tech companies is a lower total cost of ownership (TCO). Industry analysis shows that ASICs can cut TCO by 30% to 50% compared with equivalent AI GPUs. They not only reduce chip purchase costs substantially but also lower operating costs, including power consumption. Google, for example, says its TPUs deliver three times the power efficiency of NVIDIA's AI GPUs. In addition, companies using internally designed chips can procure them reliably according to their own service plans without being exposed to external variables.

Google was the first company to develop its own AI chips, unveiling its AI-specific TPU in 2016 and deploying it in its own services. Since then, most large technology companies, including Meta, Amazon and Microsoft, have actively pursued ASIC development. JPMorgan estimates that the global AI ASIC market will reach about US$30 billion in 2025 and grow at an average annual rate of more than 30% in the years ahead.

The report noted that Meta plans to release its next-generation high-performance ASIC, the MTIA T-V1, designed by Broadcom, in the fourth quarter of 2025. The chip aims to outperform AI GPUs based on NVIDIA's next-generation Rubin architecture. The MTIA T-V1.5, with double the chip area, is slated for mid-2026, followed in 2027 by the MTIA T-V2 with further improved performance; a single server is expected to draw about 170 kW, comparable to the power consumption of 50 ordinary households. Meta's plan nevertheless faces practical challenges. Meta aims to ship 1 million to 1.5 million ASICs between late 2025 and 2026, but TSMC's current advanced packaging (CoWoS) capacity for these chips is only about 300,000 to 400,000 units, so supply bottlenecks are likely.

As the ASIC push gathers pace, NVIDIA is showing no sign of weakness. It recently opened its proprietary NVLink protocol to outside parties, allowing third-party central processing units (CPUs) and ASICs to connect easily to NVIDIA GPUs. This is widely interpreted as a strategy to keep large tech companies from leaving entirely and to encourage them to stay within the NVIDIA ecosystem. In addition, NVIDIA's long-accumulated CUDA software ecosystem is regarded as its unique weapon, one that large technology companies' ASICs are unlikely to overcome in the short term. Semiconductor industry sources said that once AI services move beyond NVIDIA's framework and run on ASICs optimized for each service, consumers will enjoy faster and more refined experiences. Moreover, as AI service innovation accelerates, large technology companies reducing their dependence on NVIDIA is an inevitable step in the maturing of the AI industry.